Piercarlo Valdesolo, Department of Psychology, Claremont McKenna College

January 2016 – The research my students and I conduct at the Moral Emotions and Trust (MEAT) Lab at Claremont McKenna College centers primarily on the influence of specific emotional states on social or moral judgment and behavior. My interest in this topic began early in graduate school, when I first read Jon Haidt’s paper on the Social Intuitionist Model (Haidt, 2001) and Josh Greene’s paper on emotional engagement in moral decision making (Greene, Sommerville, Nystrom, Darley, & Cohen, 2001).

These papers argued that intuition and emotion play a major role in shaping moral decisions, and they represented a paradigm shift in a field traditionally dominated by rationalist models (e.g., Kohlberg). As someone who had entered graduate school interested in exploring the nature and function of social emotions like jealousy and gratitude, I saw an opportunity to link these emerging views in moral psychology with emotion research. As a result, my earlier work focused on demonstrating the causal power of emotion in moral decision-making, specifically in the context of a dual-process model of moral judgment, wherein intuitive and deliberative processes interact to produce moral judgments.

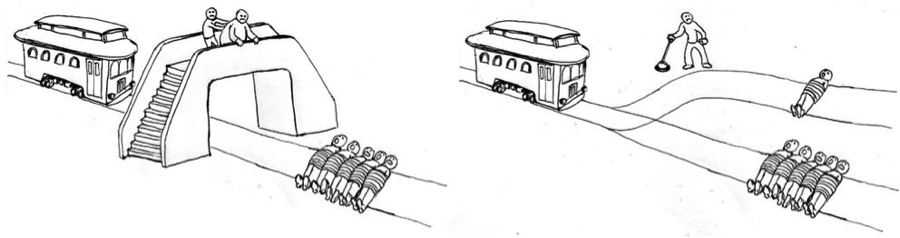

For example, in my first publication (Valdesolo & DeSteno, 2006) I reported the effects of manipulating participants’ emotional states before they responded to the well-known trolley problems. These hypothetical dilemmas have customarily been used to test the mechanisms underlying moral judgment, specifically the relative influence of intuitive and emotional processes compared to more deliberative and reasoned processes. In the standard trolley case, a runaway trolley is hurtling down the tracks towards five workmen. If it continues on its present course, it will strike and kill all five. Participants are then asked whether it would be morally appropriate for an observer to intervene to save the five workmen.

In the “switch” case, the observer is standing next to a large switch that would redirect the trolley onto a separate track, where it would strike and kill just one workman. In the “footbridge” case, the observer is standing next to a large individual on a footbridge overlooking the track and could save the five workmen by pushing this stranger into the path of the oncoming trolley, killing him but also stopping the trolley. In the switch case, participants generally indicate that it would be appropriate to divert the trolley, saving the five and killing the one. But in the footbridge case, participants generally indicate that it would be inappropriate to kill the large stranger to save the five workmen.

Josh Greene’s work had previously argued that participants’ shift from utilitarian decision-making in the switch dilemma (saving the maximum number of lives) to deontological decision-making in the footbridge dilemma (respecting an individual’s right not to be killed) was rooted in an intuitive, emotional aversion to the particular kind of action required in the footbridge case, namely pushing a large stranger to his death. It just feels wrong to push a stranger, and this aversion to direct harm trumps the utilitarian concern of saving more lives. But it doesn’t feel wrong to flip a switch, so utilitarian concerns win out in the switch case.

I took this general explanatory strategy for granted and started exploring how manipulating the decider’s baseline emotional state affects the decision taken. In particular, building on insights from the affect-as-information model (Schwarz & Clore, 1996), I hypothesized that manipulations of emotional context could temper the intuitive, emotional aversion people have towards pushing a large stranger to his death, and consequently affect participants’ willingness to endorse harmful actions.

According to the affect-as-information model, people consult their emotional states as cues to how they should decide or behave, since those states are assumed to inform them of the value of possible alternatives. This raises the possibility that emotions that emerge in one context may affect decisions in a completely unrelated context, potentially leading to inappropriate decisions. For example, the anger and frustration elicited by a long and difficult workday can spill over and lead to inappropriate behavior towards our spouse when we get home.

In line with this idea, I tested whether inducing positive affect before a moral judgment would bleed over into that judgment and make participants relatively less averse to the thought of directly harming a stranger. In short, we had participants watch either a neutral documentary film or an amusing Chris Farley sketch from Saturday Night Live before responding to the trolley dilemmas, and found that participants in the positive mood condition made a significantly greater proportion of utilitarian judgments than those in the control condition. Giving people a feeling of positivity before judgment significantly increased their willingness to push the stranger (they didn’t feel as bad about it when consulting their emotional states), revealing the causal influence of emotion on moral judgment.

My subsequent work in this area explored the phenomenon of moral hypocrisy. Why do people so readily excuse their own or their in-group members’ immoral actions while condemning the identical actions of others? I suspected that it had to do with the overriding motivation to preserve an image of the self or one’s group as moral, and the effect such motivation has on reasoning about moral transgressions. In other words, our desire to see ourselves and our in-group as “good,” no matter what, motivates us to justify our own failings, a courtesy we do not extend to the identical transgressions of others.

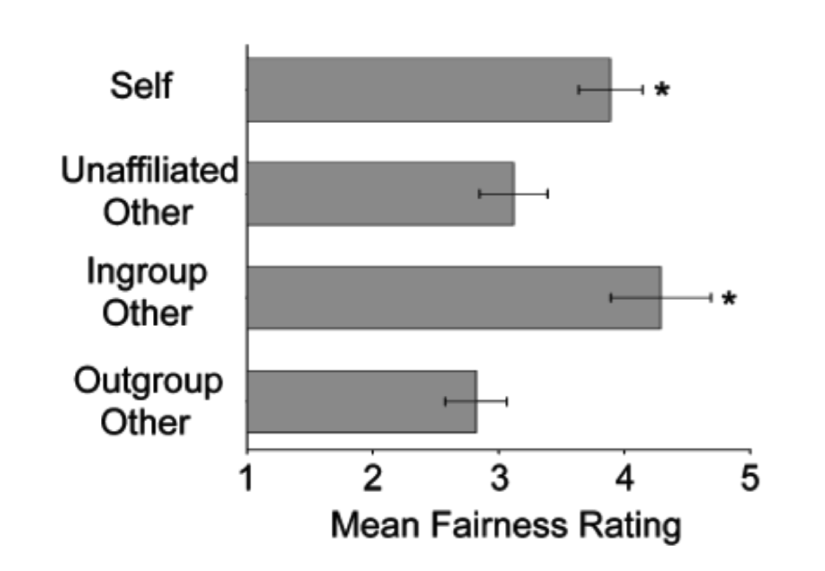

Across two studies we demonstrated that moral hypocrisy, instantiated when individuals’ evaluations of their own moral transgressions differ substantially from their evaluations of others’ identical transgressions, extends to the group level (Valdesolo & DeSteno, 2007) and is primarily driven by differences in consciously motivated reasoning when judging self vs. other (Valdesolo & DeSteno, 2008). In other words, hypocrisy exists both for judgments of self vs. other and for judgments of in-group vs. out-group, and it results from a discrepancy in how we reason about transgressions rather than in how we feel about them.

Though it may not be particularly surprising that people judge hypocritically across groups defined by long-standing conflict (e.g., liberals vs. conservatives), we showed that this tendency towards self and in-group leniency exists even in minimal groups created in the lab (people wearing red wristbands vs. blue wristbands; see Figure 1).

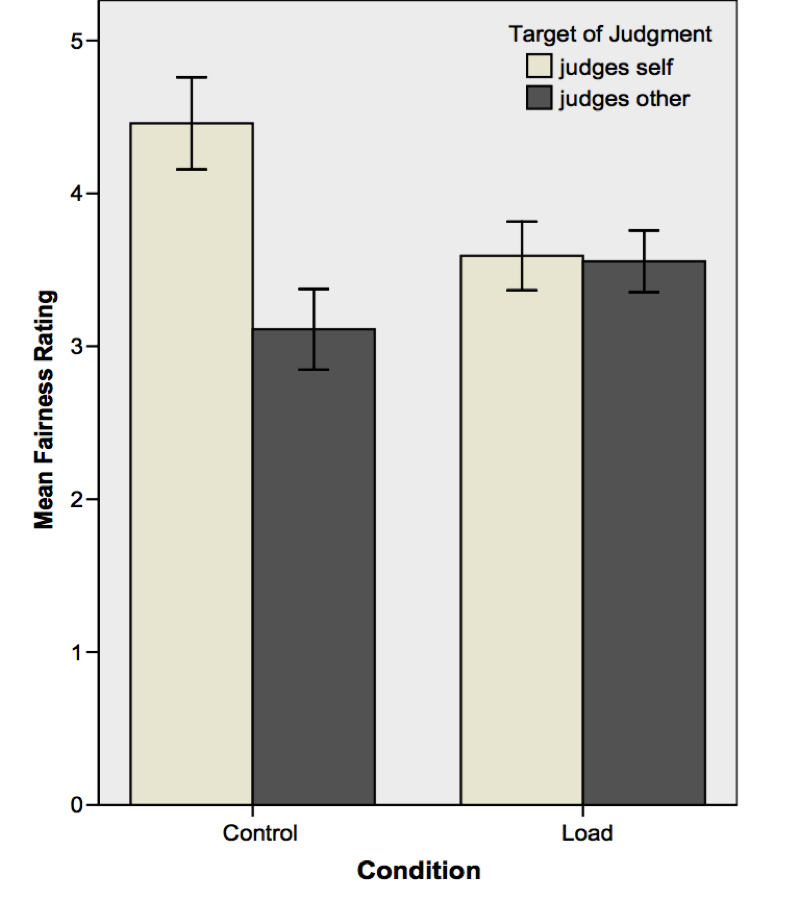

Furthermore, a dual-process model of moral judgment leads to competing predictions regarding how hypocrisy might emerge. The first possibility is that hypocrisy is driven by discrepancies in our intuitions about moral actions committed by different targets. We might have an automatic aversion towards unaffiliated and out-group others’ morally questionable actions but an automatic positivity bias when it comes to our own or our group members’ actions.

Alternatively, we might have automatic aversions to morally questionable actions regardless of the identity of the actor, but only engage in conscious justification and rationalization of our own and our group members’ transgressions. Manipulating cognitive load during moral judgment allowed us to tease apart these competing possibilities, and the data supported the view that hypocrisy is driven by differences in the degree to which we engage in motivated reasoning, as opposed to differences at the intuitive level (see Figure 2). In a nutshell, constraining people’s ability to reason about the moral transgressions eliminated hypocrisy.

More recently my work has focused on the structure and function of specific moral emotions. For example, in a study looking at the role of low-level cues to similarity in triggering compassion and altruism, we found that merely moving in synchrony with a novel interaction partner in the lab (compared to moving asynchronously) was enough to elicit greater compassion and helping behavior when that partner was later in need (Valdesolo & DeSteno, 2011). In a study exploring awe, our findings supported previous research linking this state to feelings of uncertainty (Griskevicius, Shiota, & Neufeld, 2010; Shiota, Keltner, & Mossman, 2007).

Generally speaking, awe is thought to be evoked in the presence of stimuli that are perceptually vast and that existing mental structures fail to make sense of (e.g., gazing at the stars and contemplating the vastness of the universe, or witnessing the destructive force of a natural disaster; cf. Keltner & Haidt, 2003). Such stimuli tend to trigger feelings of uncertainty and ambiguity, and motivate individuals to search for explanations and meaning. One means through which individuals satisfy these motives is a greater belief in the power of causal agents (i.e., agency detection). In our studies, we demonstrated that awe decreases tolerance for uncertainty and increases belief in the power of causal agents (Valdesolo & Graham, 2014). This relationship between awe, uncertainty, and agency detection was reflected both in an increased belief in the power of supernatural forces (e.g., karma, God) and in an increased tendency to perceive strings of numerical digits as generated by humans rather than randomly generated.

While I continue to explore both the processes underlying moral judgments and the structure and function of specific moral emotions, I have also become interested in the relationship between dominant recent theories of morality and long-standing theories of emotion. Moral Foundations Theory (MFT; Haidt & Joseph, 2004; Graham, Haidt, & Nosek, 2009) suggests that morality can be explained through a set of distinct, foundational intuitive concerns (e.g., harm, fairness, loyalty, authority, purity). These concerns are thought to be universal (though variably expressed across cultures) and the result of evolutionary pressures relevant to adaptive social living.

This perspective shares some theoretical roots with Basic Emotions Theory (BET). BET argues that emotions are distinct and universal psychological processes shaped by evolution to respond to particular kinds of adaptive concerns. Just as BET defines emotions as distinct causal mechanisms that demonstrate consistent and specific relationships with inputs and outputs (e.g., innate and universal affect programs for disgust, anger, and fear), MFT defines morality as resulting from specific correspondences between moral content and psychological experiences (e.g., innate and universal responses to violations of purity or loyalty). In short, MFT and BET share the view that understanding the evolutionary challenges faced by our ancestors can shed light on the emergence of, respectively, moral concerns and emotional responses.

As a result of this similarity, recent constructionist critiques of BET (Barrett, 2006; Lindquist, Wager, Kober, Bliss-Moreau, & Barrett, 2012) have been co-opted by moral psychologists to argue against the viability of the MFT approach (Cameron, Lindquist, & Gray, 2015; Gray, Waytz, & Young, 2012). Constructionists in affective science argue that emotions are best described by general combinatorial processes (e.g., core affect and conceptual knowledge), as opposed to being discrete packages evolved as solutions to specific adaptive problems. Similarly, constructionists in moral psychology have described the processes underlying moral judgment as a combination of affective responses and conceptual knowledge relevant to moral concerns (e.g., core affect and knowledge about interpersonal harm), rather than as domain-specific mechanisms designed to solve distinctive moral problems.

I think this is largely a good thing for the state of theorizing in moral psychology, since the field has long lacked theoretical specificity regarding how emotions interact with moral concerns. However, these critiques often either misconstrue the opposing view or target only its most extreme version. Indeed, there seem to be ways to reconcile these competing theoretical approaches without wholesale abandonment of either one (Scarantino & Griffiths, 2011; Scarantino, 2012). I have recently urged moral psychologists to consider a version of these arguments (Valdesolo, in press). I very much look forward to seeing how this debate unfolds over the coming years.

References

Barrett, L. F. (2006). Are emotions natural kinds? Perspectives on Psychological Science, 1(1), 28-58.

Cameron, C. D., Lindquist, K. A., & Gray, K. (2015). A constructionist review of morality and emotions: No evidence for specific links between moral content and discrete emotions. Personality and Social Psychology Review.

Graham, J., Haidt, J., & Nosek, B. A. (2009). Liberals and conservatives rely on different sets of moral foundations. Journal of Personality and Social Psychology, 96(5), 1029.

Gray, K., Waytz, A., & Young, L. (2012). The moral dyad: A fundamental template unifying moral judgment. Psychological Inquiry, 23(2), 206-215.

Greene, J. D., Sommerville, R. B., Nystrom, L. E., Darley, J. M., & Cohen, J. D. (2001). An fMRI investigation of emotional engagement in moral judgment. Science, 293(5537), 2105-2108.

Griskevicius, V., Shiota, M. N., & Neufeld, S. L. (2010). Influence of different positive emotions on persuasion processing: A functional evolutionary approach. Emotion, 10(2), 190.

Haidt, J. (2001). The emotional dog and its rational tail: A social intuitionist approach to moral judgment. Psychological Review, 108(4), 814.

Haidt, J., & Joseph, C. (2004). Intuitive ethics: How innately prepared intuitions generate culturally variable virtues. Daedalus, 133(4), 55-66.

Keltner, D., & Haidt, J. (2003). Approaching awe, a moral, spiritual, and aesthetic emotion. Cognition and Emotion, 17(2), 297-314.

Lindquist, K. A., Wager, T. D., Kober, H., Bliss-Moreau, E., & Barrett, L. F. (2012). The brain basis of emotion: A meta-analytic review. Behavioral and Brain Sciences, 35(3), 121-143.

Rand, D. G., Greene, J. D., & Nowak, M. A. (2012). Spontaneous giving and calculated greed. Nature, 489(7416), 427-430.

Scarantino, A. (2012). How to define emotions scientifically. Emotion Review, 4(4), 358-368.

Scarantino, A., & Griffiths, P. (2011). Don’t give up on basic emotions. Emotion Review, 3(4), 444-454.

Schwarz, N., & Clore, G. L. (1996). Feelings and phenomenal experiences. Social psychology: Handbook of basic principles, 2, 385-407.

Shiota, M. N., Keltner, D., & Mossman, A. (2007). The nature of awe: Elicitors, appraisals, and effects on self-concept. Cognition and Emotion, 21(5), 944-963.

Valdesolo, P., & DeSteno, D. (2006). Manipulations of emotional context shape moral judgment. Psychological Science, 17(6), 476-477.

Valdesolo, P., & DeSteno, D. (2007). Moral hypocrisy: Social groups and the flexibility of virtue. Psychological Science, 18(8), 689-690.

Valdesolo, P., & DeSteno, D. (2008). The duality of virtue: Deconstructing the moral hypocrite. Journal of Experimental Social Psychology, 44(5), 1334-1338.

Valdesolo, P., & DeSteno, D. (2011). Synchrony and the social tuning of compassion. Emotion, 11(2), 262.

Valdesolo, P., & Graham, J. (2014). Awe, uncertainty, and agency detection. Psychological Science, 25(1), 170-178.

Valdesolo, P. (in press). Getting emotions right in moral psychology. In J. Graham & K. Gray (Eds.), Atlas of Moral Psychology.